AI Innovations Transforming Infrastructure Management Practices

Outline:

– Setting the stage: Why automation, predictive analytics, and digital twins now

– Automation: Where it fits, what it changes, and how to scale it safely

– Predictive analytics: Data, models, and operational value

– Digital twins: From static models to living systems

– Putting it together: Architecture, governance, and an actionable roadmap

The New Operating System for Infrastructure

Infrastructure managers are facing a familiar squeeze: growing demand, aging assets, and tighter budgets. Three AI-enabled capabilities (automation, predictive analytics, and digital twins) are reshaping this equation by compressing time-to-action, reducing uncertainty, and improving coordination across departments. Think of them as a new operating system for physical operations: automation speeds routine execution, predictive analytics grounds decisions in probabilities rather than gut feel, and digital twins provide a shared, living picture of reality. Together, they turn scattered data points into timely interventions that measurably improve service quality, safety, and cost.

The timing is not incidental. Sensors are more affordable, connectivity is more reliable, and data storage costs have declined steadily over the past decade. At the same time, asset complexity has increased: distributed energy resources, smart water meters, adaptive traffic signals, and condition-monitoring devices generate continuous streams of information. Without tools that can act, anticipate, and visualize at scale, teams drown in alerts and spreadsheets. When aligned correctly, these three innovations deliver practical outcomes reported across multiple sectors: double-digit percentage reductions in unplanned downtime, lower energy consumption through continuous optimization, and incident response measured in minutes rather than hours.

For decision-makers, the key is to treat the triad as interdependent rather than as disconnected projects. A digitized workflow without predictive intelligence simply accelerates the wrong tasks. A predictive model without automation may recommend actions that never happen on time. A digital twin without trustworthy data becomes a beautiful dashboard that nobody uses. The highest returns appear when organizations synchronize them around clear business objectives and measurable service levels. Useful early goals include:

– reducing emergency work orders as a share of total maintenance

– improving mean time between failures for critical assets

– trimming energy intensity per delivered unit of service

– cutting time-to-diagnosis for incidents across shifts

These outcomes are tangible, auditable, and align technical teams with board-level priorities.
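Each of these goals reduces to simple, auditable arithmetic once work-order and meter data are available. The sketch below assumes a hypothetical work-order record with an `emergency` flag and made-up sample figures; it illustrates the calculations rather than any standard schema.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class WorkOrder:
    asset_id: str
    emergency: bool  # True if the order was raised as unplanned/emergency work


def emergency_share(orders: list[WorkOrder]) -> float:
    """Emergency work orders as a fraction of all maintenance work orders."""
    if not orders:
        return 0.0
    return sum(o.emergency for o in orders) / len(orders)


def mtbf_hours(operating_hours: float, failures: int) -> float:
    """Mean time between failures: operating hours divided by failure count."""
    if failures == 0:
        return float("inf")  # no failures observed in the measurement window
    return operating_hours / failures


def energy_intensity(kwh: float, units_delivered: float) -> float:
    """Energy per delivered unit of service, e.g. kWh per cubic meter pumped."""
    return kwh / units_delivered


# Hypothetical baseline data for one reporting period.
orders = [
    WorkOrder("P-101", True),
    WorkOrder("P-101", False),
    WorkOrder("V-7", False),
    WorkOrder("T-3", False),
]
print(emergency_share(orders))       # 0.25
print(mtbf_hours(8760, 4))           # 2190.0
print(energy_intensity(5000, 2000))  # 2.5
```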

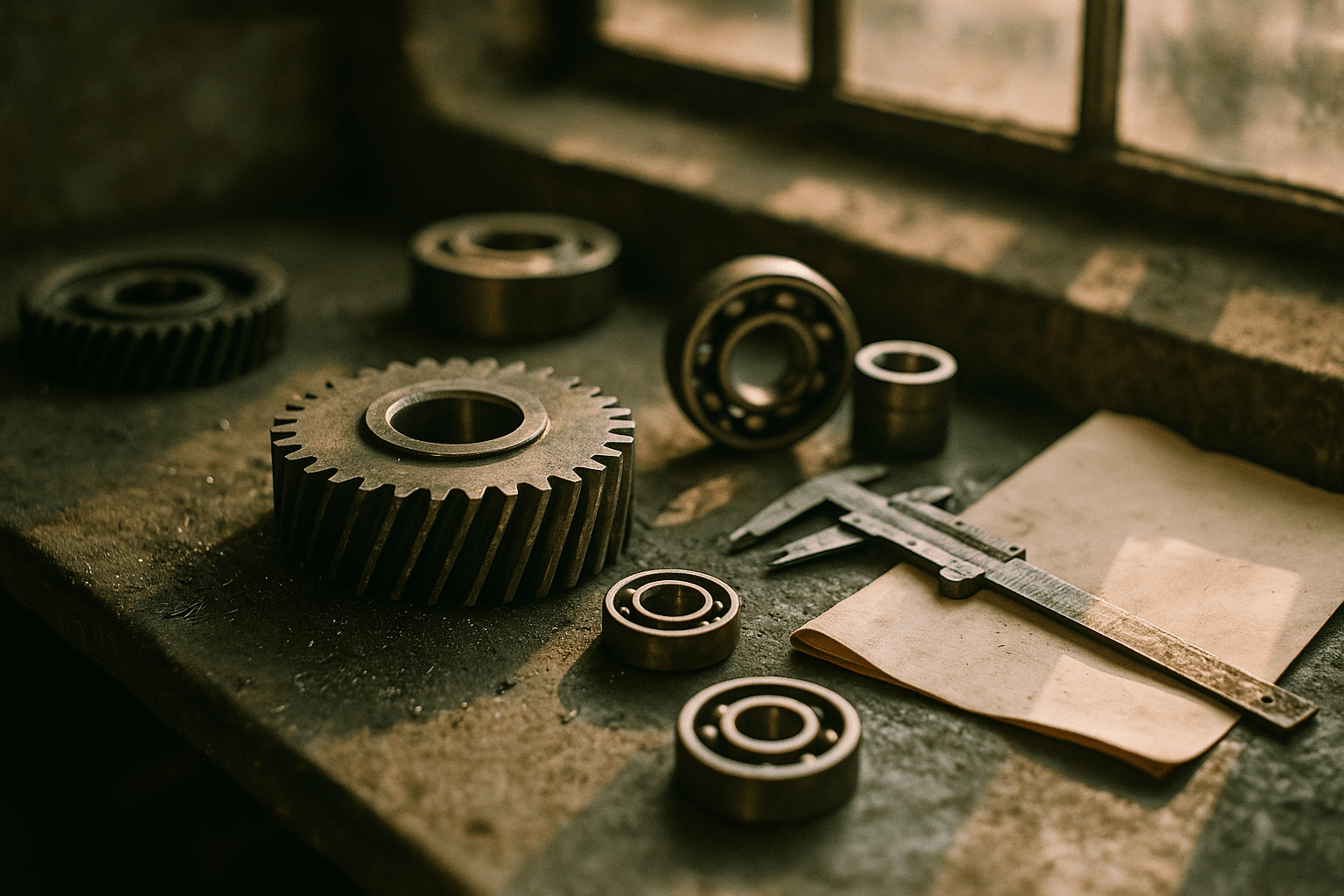

Automation in Infrastructure: From Workflows to Field Execution

Automation in infrastructure covers far more than scripts and control loops. It spans back-office orchestration, network operations, and field execution. On one end, rules and event-driven workflows handle repetitive tasks—validating data, scheduling crews, generating compliance reports, and reconciling sensor anomalies. On the other end, machine-guided systems support dynamic setpoint tuning for plants, automated incident triage in control rooms, and semi-autonomous inspections using cameras and other sensors. The practical effect is to shorten the path from signal to action while maintaining traceability for safety and regulatory audits.

There are two broad flavors of automation, and they often complement each other:

– Deterministic, rules-based flows: reliable, explainable, and suitable for well-defined tasks or regulatory steps.

– Adaptive, data-informed flows: capable of learning from patterns and changing conditions, but requiring careful guardrails.

A useful comparison: rules-based steps excel at “always do this when X happens,” while adaptive approaches shine in “choose among options A, B, or C to meet current operating constraints.” A balanced design typically places deterministic controls on safety-critical boundaries and lets adaptive logic manage efficiency and prioritization.
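A minimal sketch of that split, assuming a hypothetical pump setpoint with a fixed safe range and a cost score supplied by some adaptive model: the rules-based check runs first and cannot be overridden, and the adaptive choice only ranks the options that pass it.

```python
from __future__ import annotations

from dataclasses import dataclass

# Deterministic safety envelope: fixed, reviewed, and never learned.
# The bounds are hypothetical, e.g. allowed discharge pressure in bar.
SAFE_MIN, SAFE_MAX = 2.0, 6.0


@dataclass
class Option:
    name: str
    setpoint: float       # proposed setpoint
    expected_cost: float  # score from an adaptive model (hypothetical)


def within_envelope(option: Option) -> bool:
    """Rules-based layer: always reject anything outside the envelope."""
    return SAFE_MIN <= option.setpoint <= SAFE_MAX


def choose(options: list[Option]) -> Option | None:
    """Adaptive layer: pick the cheapest option among those the rules allow.

    Returns None when no option is safe, signalling a handoff to an operator.
    """
    safe = [o for o in options if within_envelope(o)]
    if not safe:
        return None
    return min(safe, key=lambda o: o.expected_cost)


options = [Option("A", 7.5, 10.0), Option("B", 4.0, 12.0), Option("C", 3.0, 15.0)]
print(choose(options).name)  # B: A is cheaper but violates the envelope
```

Keeping the envelope outside the learned component means a model update can change which safe option is preferred, but never which options are allowed.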

Well-scoped automation can deliver meaningful gains. Industry benchmarks commonly report 20–40% cycle-time reductions for routine administrative work, 10–20% lower energy consumption from continuous tuning in facilities, and materially faster fault isolation in networks where automated runbooks start diagnostics the moment thresholds are crossed. Benefits expand when automation is connected to downstream logistics: automatically checking spares inventory, reserving equipment, and notifying crews with clear procedures and location data.

Risks exist, but they are manageable: drift from poorly maintained rules, alert fatigue from over-automation, and handoff failures between automated and manual steps. Design reviews, progressive rollouts, and clear kill switches that instantly return control to operators during anomalies mitigate these risks.
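The runbook-plus-kill-switch pattern can be sketched as follows. The threshold, log messages, and `on_reading` function are hypothetical; a production system would also persist the log for audits and connect to whatever paging and control systems are in use.

```python
import threading


class KillSwitch:
    """Operator-controlled flag; while engaged, automation must not act."""

    def __init__(self) -> None:
        self._engaged = threading.Event()  # thread-safe, settable from a UI thread

    def engage(self) -> None:
        self._engaged.set()

    def release(self) -> None:
        self._engaged.clear()

    @property
    def engaged(self) -> bool:
        return self._engaged.is_set()


def on_reading(reading: float, threshold: float, kill: KillSwitch, log: list) -> str:
    """Start an automated diagnostic runbook when a threshold is crossed,
    unless operators have taken back control."""
    if reading <= threshold:
        return "no_action"
    if kill.engaged:
        log.append(f"threshold crossed ({reading}); kill switch engaged, paging operator")
        return "manual"
    log.append(f"threshold crossed ({reading}); starting automated diagnostics")
    return "automated"


kill = KillSwitch()
log: list = []
print(on_reading(82.0, 75.0, kill, log))  # automated
kill.engage()
print(on_reading(82.0, 75.0, kill, log))  # manual
```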

To scale responsibly, organizations can develop a catalog of reusable automations with owners, versioning, and performance metrics. Good hygiene includes:

– documenting prerequisites and expected outcomes

– embedding tests for edge conditions

– monitoring latency and success rates

– keeping humans-in-the-loop for exceptional cases

The result is an automation fabric that accelerates work without sacrificing resilience or accountability.
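One way to represent a catalog entry, sketched under the assumption that each automation reports its latency and success after every run. The field names, the sample entry, and the 95% review threshold are illustrative choices, not a standard.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from statistics import mean


@dataclass
class AutomationEntry:
    name: str
    owner: str                # accountable team or person
    version: str
    prerequisites: list[str]  # documented preconditions
    expected_outcome: str
    latencies_s: list[float] = field(default_factory=list)
    outcomes: list[bool] = field(default_factory=list)

    def record_run(self, latency_s: float, succeeded: bool) -> None:
        """Monitoring hook: call after every execution."""
        self.latencies_s.append(latency_s)
        self.outcomes.append(succeeded)

    def success_rate(self) -> float:
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 0.0

    def mean_latency(self) -> float:
        return mean(self.latencies_s) if self.latencies_s else 0.0

    def needs_review(self, min_success: float = 0.95) -> bool:
        """Route the automation to its owner when reliability falls below target."""
        return bool(self.outcomes) and self.success_rate() < min_success


entry = AutomationEntry(
    name="reserve-spares",
    owner="maintenance-planning",
    version="1.2.0",
    prerequisites=["asset ID resolved", "inventory system reachable"],
    expected_outcome="spare part reserved within 60 s",
)
for latency, ok in [(12.0, True), (18.0, True), (40.0, False)]:
    entry.record_run(latency, ok)
print(round(entry.success_rate(), 2), entry.needs_review())  # 0.67 True
```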

Predictive Analytics: Anticipation as a Service

Predictive analytics turns raw telemetry and historical records into forward-looking guidance on failures, demand, and risk. In infrastructure, common targets include failure prediction for pumps, transformers, and valves; leak detection in water networks; congestion forecasting for roads; and energy demand shaping for facilities. The core workflow is consistent: gather data, engineer features, train models, validate results, and operationalize outputs so they reach the right team in time to matter. The technical elements matter, but operational fit matters more—alerts must be credible, interpretable, and tied to clear actions, or they will be ignored during busy shifts.

Metrics guide trust. Precision is the share of alerts that correspond to real events, recall is the share of real events that the model flagged, lead time measures how early a model warns, and uplift compares outcomes with and without predictions. Organizations often target thresholds such as:

– minimum precision of 0.8 for costly interventions

– recall above 0.7 for safety-related events

– lead time sufficient to schedule crews and procure parts

These are starting points, not rigid rules, and should be tailored to asset criticality and service-level obligations. In practice, models with moderate accuracy can still create substantial value when integrated with smart triage and prioritization logic.
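These metrics depend on how an alert is matched to a failure. The sketch below assumes one simple rule (an alert counts if the same asset fails within a fixed horizon after it) and uses hypothetical alerts and failures; other matching rules will give different numbers.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Alert:
    asset_id: str
    raised_at: datetime


@dataclass
class Failure:
    asset_id: str
    occurred_at: datetime


def evaluate(alerts: list[Alert], failures: list[Failure], horizon: timedelta):
    """Return (precision, recall, lead_times) under an assumed matching rule:
    an alert predicts a failure on the same asset within `horizon` after it."""

    def matches(a: Alert, f: Failure) -> bool:
        gap = f.occurred_at - a.raised_at
        return a.asset_id == f.asset_id and timedelta(0) <= gap <= horizon

    true_alerts = sum(any(matches(a, f) for f in failures) for a in alerts)
    precision = true_alerts / len(alerts) if alerts else 0.0

    lead_times = []
    for f in failures:
        gaps = [f.occurred_at - a.raised_at for a in alerts if matches(a, f)]
        if gaps:
            lead_times.append(max(gaps))  # earliest warning = longest lead time
    recall = len(lead_times) / len(failures) if failures else 0.0
    return precision, recall, lead_times


t0 = datetime(2024, 1, 1)
alerts = [Alert("P-1", t0), Alert("P-2", t0), Alert("P-3", t0 + timedelta(days=1))]
failures = [
    Failure("P-1", t0 + timedelta(days=5)),
    Failure("P-3", t0 + timedelta(days=3)),
    Failure("P-4", t0 + timedelta(days=2)),  # missed: no alert for this asset
]
precision, recall, lead_times = evaluate(alerts, failures, horizon=timedelta(days=14))
meets_targets = precision >= 0.8 and recall >= 0.7  # example targets from the text
print(round(precision, 2), round(recall, 2), meets_targets)  # 0.67 0.67 False
```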

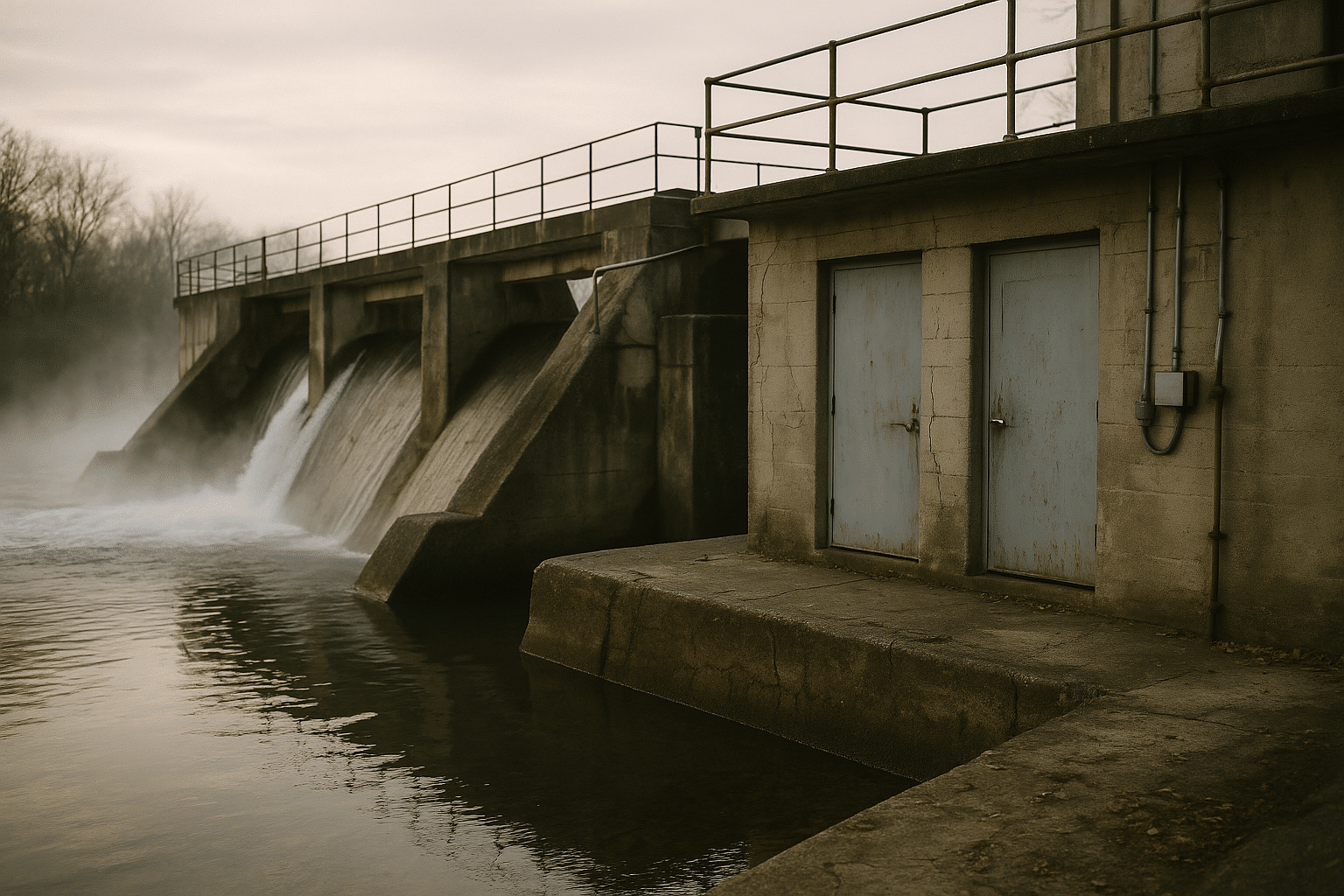

The economic case is tangible. Predictive maintenance programs often report 20–50% reductions in unplanned downtime and 10–30% savings in maintenance spend through fewer emergency callouts and optimized spare parts. In water systems, predictive leak detection can reduce non-revenue water through early repairs and pressure balancing. In grid operations, forecasting helps reconfigure topology or schedule maintenance during low-demand windows. The secret is not exotic algorithms; it is disciplined data management and feedback loops that continuously retrain models with newly labeled outcomes.

Comparing approaches clarifies choices. Time-based maintenance is simple and compliant but can over-service assets. Condition-based maintenance relies on thresholds and is a step forward yet still reactive. Predictive approaches weigh multiple signals—vibration, temperature, load cycles, environmental conditions—and assign probabilities to future events, enabling staged responses from “inspect soon” to “replace at next outage.” Pairing predictions with automated work orders, spare reservations, and pre-approved procedures turns foresight into consistent action. Done right, prediction becomes an always-on service for operations, not an isolated data project.
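Staged responses can be sketched as probability bands mapped to actions, with the result feeding a work-order queue. The band cut-offs, asset IDs, and `WorkOrder` record below are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical probability bands, checked from most to least severe.
# Real cut-offs should reflect asset criticality and service-level obligations.
STAGES = [(0.6, "replace at next outage"), (0.3, "inspect soon")]


@dataclass
class WorkOrder:
    asset_id: str
    action: str
    failure_probability: float


def stage_for(p: float) -> str | None:
    """Map a failure probability to a staged response; None means keep monitoring."""
    for cutoff, action in STAGES:
        if p >= cutoff:
            return action
    return None


def to_work_orders(predictions: dict[str, float]) -> list[WorkOrder]:
    """Create work orders for assets that fall in a band, highest risk first."""
    orders = []
    for asset_id, p in predictions.items():
        action = stage_for(p)
        if action is not None:
            orders.append(WorkOrder(asset_id, action, p))
    return sorted(orders, key=lambda o: o.failure_probability, reverse=True)


predictions = {"P-101": 0.41, "TX-12": 0.72, "V-7": 0.05}
for order in to_work_orders(predictions):
    print(order.asset_id, "->", order.action)
# TX-12 -> replace at next outage
# P-101 -> inspect soon
```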

Digital Twins: Living Context for Real-World Decisions

A digital twin is a dynamic representation of an asset, process, or network that stays synchronized with reality. It fuses geometry, topology, operational data, and business context, enabling teams to see not only what is happening but also where and why. Unlike static design models, a mature twin can ingest telemetry, capture configuration changes, simulate scenarios, and feed outcomes back into operations. For infrastructure managers, this means a single source of truth where engineering, operations, finance, and compliance can coordinate decisions with shared facts.
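A minimal sketch of the synchronization idea for a single asset, assuming a hypothetical `AssetTwin` record that combines geometry, topology, business context, telemetry, and a configuration history. Real twins typically sit on a graph or spatial data store; this only illustrates what staying synchronized means in code.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class AssetTwin:
    asset_id: str
    location: tuple[float, float]  # geometry: (latitude, longitude)
    upstream: list[str]            # topology: IDs of assets that feed this one
    criticality: str               # business context, e.g. "high"
    config: dict[str, str] = field(default_factory=dict)
    telemetry: dict[str, float] = field(default_factory=dict)
    changes: list[tuple[datetime, str]] = field(default_factory=list)
    last_update: datetime | None = None

    def ingest(self, ts: datetime, signal: str, value: float) -> None:
        """Operational data: keep the latest value of each signal."""
        self.telemetry[signal] = value
        self.last_update = ts

    def change_config(self, ts: datetime, key: str, value: str) -> None:
        """Capture configuration changes with an audit trail."""
        self.changes.append((ts, f"{key}: {self.config.get(key)} -> {value}"))
        self.config[key] = value
        self.last_update = ts

    def is_stale(self, now: datetime, max_age: timedelta) -> bool:
        """A twin that stops receiving updates no longer reflects reality."""
        return self.last_update is None or now - self.last_update > max_age


t0 = datetime(2024, 6, 1, 8, 0)
twin = AssetTwin("PUMP-101", (51.50, -0.12), ["RES-2"], "high", config={"impeller": "rev-A"})
twin.ingest(t0, "discharge_pressure_bar", 4.2)
twin.change_config(t0 + timedelta(hours=2), "impeller", "rev-B")
print(twin.changes[-1][1])                                            # impeller: rev-A -> rev-B
print(twin.is_stale(t0 + timedelta(hours=3), timedelta(minutes=15)))  # True
```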

Use cases are diverse. Facility twins help optimize energy by testing setpoint changes virtually before applying them. Network twins in water and power visualize flows and constraints, highlight risks under varying demand, and identify the least-cost path to meet service targets. Transportation twins speed incident management by mapping sensor alerts to physical locations and evaluating route alternatives. During extreme weather, a twin can simulate asset stress, crew access, and inventory availability to guide staging decisions. Under routine conditions, it becomes a training ground where teams rehearse responses without risking service interruptions.
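Evaluating route alternatives in a network twin is, at its simplest, a shortest-path problem over a weighted graph. The sketch below uses Dijkstra's algorithm (one standard choice, not necessarily what any given twin platform uses) on a hypothetical road graph with travel times in minutes, and simulates an incident by closing an edge.

```python
from __future__ import annotations

import heapq


def least_cost_path(
    graph: dict[str, dict[str, float]],
    start: str,
    goal: str,
    closed: frozenset[tuple[str, str]] = frozenset(),
) -> tuple[list[str] | None, float]:
    """Dijkstra over a network twin; `closed` holds edges (u, v) removed
    to simulate an incident or outage. Weights must be non-negative."""
    dist = {start: 0.0}
    prev: dict[str, str] = {}
    heap = [(0.0, start)]
    while heap:
        d, u = heapq.heappop(heap)
        if u == goal:
            break
        if d > dist.get(u, float("inf")):
            continue  # stale heap entry
        for v, w in graph.get(u, {}).items():
            if (u, v) in closed:
                continue
            nd = d + w
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                prev[v] = u
                heapq.heappush(heap, (nd, v))
    if goal not in dist:
        return None, float("inf")
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return path[::-1], dist[goal]


graph = {"Depot": {"A": 4, "B": 8}, "A": {"Site": 6, "B": 2}, "B": {"Site": 3}}
print(least_cost_path(graph, "Depot", "Site"))  # (['Depot', 'A', 'B', 'Site'], 9.0)
print(least_cost_path(graph, "Depot", "Site", closed=frozenset({("A", "B")})))
# (['Depot', 'A', 'Site'], 10.0)
```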

Maturity grows in layers:

– Descriptive: the twin reflects the as-is state with reliable metadata.

– Diagnostic: it explains anomalies by correlating signals and events.

– Predictive: it forecasts behaviors under likely conditions.

– Prescriptive: it recommends actions and can trigger automated steps with guardrails.

Progressing through these stages requires disciplined data governance, change management, and an integration plan that keeps the twin current as assets and networks evolve. A common pitfall is attempting a sweeping, all-at-once build. More durable results start with high-value slices—such as a substation, a treatment plant, or a corridor—then scale.

Value stems from reduced rework, faster issue resolution, and improved coordination. Teams report shorter time-to-diagnosis when context is immediate and visual, along with measurable gains in energy performance when optimization runs continuously rather than seasonally. Importantly, twins lower organizational friction: planners stop emailing outdated schematics, operators see how upstream changes affect their metrics, and finance gains transparent line-of-sight from interventions to outcomes. The twin becomes the meeting place where assumptions are tested against data.

From Vision to Practice: Integrating the Trio with Governance and a Roadmap

Automation, predictive analytics, and digital twins yield the strongest results when woven into a coherent architecture and governed with clarity. The technical blueprint typically includes four layers: data ingestion and quality controls; a semantic layer that standardizes asset and event models; analytic services for prediction and simulation; and an orchestration layer that drives workflows, alerts, and change logs. Security and privacy run horizontally across all layers, with strict identity and access rules, audit trails, and incident response playbooks. Rather than a monolith, aim for modular services that can grow without constant re-platforming.
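The four layers can be sketched as four small functions chained together. Tag names, asset IDs, and alarm limits here are hypothetical, the analytic layer is a simple limit check standing in for a real model, and the horizontal security layer is omitted.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical raw records as they might arrive from a historian export.
RAW = [
    {"tag": "pmp101_vib", "value": "7.9"},
    {"tag": "pmp101_vib", "value": ""},  # missing value
    {"tag": "tx12_temp", "value": "96.5"},
]

# Semantic layer: map raw tags to a shared asset/measure model (hypothetical IDs).
TAG_MAP = {
    "pmp101_vib": ("PUMP-101", "vibration_mm_s"),
    "tx12_temp": ("TX-12", "winding_temp_c"),
}

# Hypothetical alarm limits used by the stand-in analytic service.
LIMITS = {"vibration_mm_s": 7.1, "winding_temp_c": 110.0}


@dataclass
class Reading:
    asset_id: str
    measure: str
    value: float


def ingest(raw: list[dict]) -> list[tuple[str, float]]:
    """Layer 1: ingestion with quality control (drop missing or non-numeric values)."""
    clean = []
    for record in raw:
        try:
            clean.append((record["tag"], float(record["value"])))
        except ValueError:
            continue
    return clean


def to_semantic(records: list[tuple[str, float]]) -> list[Reading]:
    """Layer 2: translate raw tags into standardized asset and measure names."""
    return [Reading(*TAG_MAP[tag], value) for tag, value in records if tag in TAG_MAP]


def analyze(readings: list[Reading]) -> list[Reading]:
    """Layer 3: analytic service; here a limit check standing in for a model."""
    return [r for r in readings if r.value > LIMITS[r.measure]]


def orchestrate(findings: list[Reading], change_log: list[str]) -> int:
    """Layer 4: trigger workflows and record every action in a change log."""
    for f in findings:
        change_log.append(f"work order requested for {f.asset_id} ({f.measure}={f.value})")
    return len(findings)


change_log: list[str] = []
print(orchestrate(analyze(to_semantic(ingest(RAW))), change_log))  # 1
print(change_log)  # ['work order requested for PUMP-101 (vibration_mm_s=7.9)']
```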

Practical governance addresses who can change what, how models are validated, and how operations confirm success. A lightweight model registry is useful for tracking versions, performance, and approval status. A change-advisory routine ensures that automated actions affecting safety-critical systems meet documented criteria. Role clarity is equally important: operations define acceptance thresholds and intervention playbooks, data teams maintain pipelines and models, and engineering validates that simulated actions are physically plausible. To detect drift early, every automated or predictive decision should be end-to-end observable: inputs, rules, outcomes, and the human or automated follow-up.
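A lightweight registry and decision log might look like the sketch below. It assumes acceptance thresholds expressed as minimum metric values set by operations; class and method names are hypothetical, and a real registry would persist its state and audit trail.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class ModelVersion:
    name: str
    version: str
    metrics: dict[str, float]
    status: str = "candidate"  # candidate -> approved


class ModelRegistry:
    """Minimal registry: versions, validation metrics, approval status, audit log."""

    def __init__(self, thresholds: dict[str, float]) -> None:
        self.thresholds = thresholds  # acceptance thresholds defined by operations
        self.versions: dict[tuple[str, str], ModelVersion] = {}
        self.audit: list[dict] = []

    def register(self, mv: ModelVersion) -> None:
        self.versions[(mv.name, mv.version)] = mv
        self._log("register", "data-team", model=f"{mv.name}:{mv.version}")

    def approve(self, name: str, version: str, approver: str) -> None:
        mv = self.versions[(name, version)]
        failing = [m for m, t in self.thresholds.items() if mv.metrics.get(m, 0.0) < t]
        if failing:
            raise ValueError(f"{name}:{version} below threshold on {failing}")
        mv.status = "approved"
        self._log("approve", approver, model=f"{name}:{version}")

    def record_decision(self, name: str, version: str, inputs: dict,
                        output: float, follow_up: str) -> None:
        """Only approved models may drive decisions; each one is logged end to end."""
        if self.versions[(name, version)].status != "approved":
            raise PermissionError(f"{name}:{version} is not approved for operations")
        self._log("decision", "system", model=f"{name}:{version}", inputs=inputs,
                  output=output, follow_up=follow_up)

    def _log(self, event: str, actor: str, **details) -> None:
        self.audit.append({"at": datetime.now(timezone.utc), "event": event,
                           "actor": actor, **details})


registry = ModelRegistry({"precision": 0.8, "recall": 0.7})
registry.register(ModelVersion("pump-failure", "3", {"precision": 0.84, "recall": 0.73}))
registry.register(ModelVersion("pump-failure", "4", {"precision": 0.78, "recall": 0.81}))
registry.approve("pump-failure", "3", approver="ops-lead")
try:
    registry.approve("pump-failure", "4", approver="ops-lead")
except ValueError as err:
    print(err)  # pump-failure:4 below threshold on ['precision']
registry.record_decision("pump-failure", "3", {"vibration_mm_s": 7.9}, 0.62, "crew dispatched")
print([e["event"] for e in registry.audit])  # ['register', 'register', 'approve', 'decision']
```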

An incremental roadmap helps de-risk investment:

– Start with a narrow, high-impact slice and publish baseline KPIs.

– Automate the last mile first: ensure that insights trigger precise actions.

– Wrap insights in a digital twin view so context is always visible.

– Expand data coverage and prediction scope only after closing the feedback loop.

– Institutionalize learning by scheduling quarterly reviews of what worked, what failed, and why.

This approach compounds value while keeping stakeholders aligned.

Financials and risk merit transparent framing. Early pilots can fund themselves through avoided outages, energy savings, and reduced emergency work. Sensible assumptions might include conservative adoption curves, model degradation allowances, and contingency buffers for training and change management. On the risk side, treat model bias, automation failure modes, and cyber threats as addressable engineering problems: test before deploy, isolate safety-critical controls, and maintain manual override paths. With these foundations, the trio becomes more than technology; it becomes a repeatable method for delivering reliable, efficient, and resilient infrastructure.
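Those assumptions can be made explicit in a simple multi-year calculation. The sketch below assumes benefits scale with an adoption curve, erode by a fixed fraction per year as an allowance for model degradation, and that costs carry a contingency buffer; the function name and every figure in the example are hypothetical.

```python
from __future__ import annotations


def pilot_cash_flow(
    annual_benefit: float,  # avoided outages + energy + emergency work at full adoption
    upfront_cost: float,
    adoption: list[float],  # share of full benefit realized in each year
    degradation: float,     # fraction of benefit lost per year (degradation allowance)
    contingency: float,     # buffer for training and change management, fraction of cost
) -> tuple[float, int | None]:
    """Return (net benefit over the horizon, payback year or None)."""
    total_cost = upfront_cost * (1 + contingency)
    cumulative = 0.0
    payback_year = None
    for year, share in enumerate(adoption, start=1):
        cumulative += annual_benefit * share * (1 - degradation) ** (year - 1)
        if payback_year is None and cumulative >= total_cost:
            payback_year = year
    return cumulative - total_cost, payback_year


net, payback = pilot_cash_flow(200_000, 150_000, [0.3, 0.6, 0.9], degradation=0.1, contingency=0.2)
print(round(net), payback)  # 133800 3
```

Rerunning the calculation with more pessimistic adoption or degradation inputs is a quick way to check whether a pilot still pays back within its planning horizon.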