Overview of Machine Learning Model Deployment Services

Outline and Reading Guide

Machine learning deployment has matured from a niche engineering chore into a disciplined practice with its own playbooks, metrics, and governance norms. This article offers a structured journey through three pillars—deployment, automation, and scalability—so teams can transform experimental models into dependable services. Whether you’re a data scientist planning your first release or a platform engineer refining an existing stack, the goal here is practical clarity: patterns you can reuse, pitfalls worth avoiding, and criteria to help you choose between viable options rather than chase silver bullets.

To help you navigate, here is the roadmap we will follow, along with what you should expect to take away from each part:

– Deployment: a tour of service shapes (batch, real-time, streaming), packaging strategies, rollout methods, and observability must-haves.

– Automation: how pipelines, testing, and policy guardrails reduce toil and increase reliability, with examples of checks that actually catch issues.

– Scalability: capacity planning, autoscaling signals, performance tuning (batching, caching, model optimization), and resilient traffic management.

– Conclusion: a pragmatic path you can adapt for your organization, with a lightweight checklist and a maturity ladder to guide next steps.

Before we dive in, a few working definitions keep us aligned. By “deployment services,” we mean the infrastructure and processes that accept requests, run inference, and return results under defined service-level objectives. Automation covers the repeatable pathways that move code and models from commit to production, including retraining and validation. Scalability refers to the system’s ability to meet demand growth while holding latency, error rates, and costs within targets. Thinking in these terms keeps discussions concrete: what is the boundary of the service, how does change flow through it, and how will it behave on a busy day?

Finally, consider your constraints while reading. Some teams optimize for single-digit millisecond latency, others for high-throughput batch scoring overnight; some prefer immutable weekly releases, others continuous delivery with tight guardrails. The comparisons ahead highlight trade-offs rather than declare a one-size-fits-all default. As you read, note the assumptions that match your environment—data freshness needs, peak patterns, regulatory obligations—and you will leave with a template that maps to your reality.

Deployment: From Notebook to Reliable Service

Moving from a training notebook to a reliable service is a shift from artifacts to agreements. The artifact is the model plus its code and dependencies; the agreements are the service contract: inputs, outputs, latency targets, and error budgets. Start by choosing the service shape that fits your use case. Batch deployment excels when requests are predictable and freshness tolerates minutes to hours; it often achieves high throughput at low cost. Real-time deployment suits interactive experiences, where a p95 latency target of roughly 100 ms is a common starting point for keeping interactions responsive. Streaming inference sits in between, processing events continuously with bounded lag and steady resource usage. The right shape reduces complexity before any optimization begins.
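One way to make the service contract concrete is to encode it as data that the serving layer checks on every request and response. The sketch below assumes dictionary payloads; `ServiceContract` and its fields are hypothetical names for illustration rather than a specific framework's API, and the 100 ms and 0.1% values are placeholders.

```python
from dataclasses import dataclass
from typing import Any, Mapping, Tuple


@dataclass(frozen=True)
class ServiceContract:
    """Hypothetical service contract: request schema, response fields, and SLO targets."""
    input_schema: Mapping[str, type]   # required field name -> expected Python type
    output_fields: Tuple[str, ...]     # fields every response must contain
    p95_latency_ms: float              # latency target at the 95th percentile
    error_budget: float                # allowed fraction of failed requests

    def validate_request(self, payload: Mapping[str, Any]) -> list:
        """Return a list of violations; an empty list means the request is accepted."""
        errors = []
        for name, expected in self.input_schema.items():
            if name not in payload:
                errors.append(f"missing field: {name}")
            elif not isinstance(payload[name], expected):
                errors.append(
                    f"{name}: expected {expected.__name__}, got {type(payload[name]).__name__}"
                )
        return errors

    def validate_response(self, response: Mapping[str, Any]) -> list:
        """Check that the model output honors the contract before returning it."""
        return [f"missing output: {f}" for f in self.output_fields if f not in response]


contract = ServiceContract(
    input_schema={"user_id": str, "amount": float},
    output_fields=("score",),
    p95_latency_ms=100.0,  # placeholder target
    error_budget=0.001,    # placeholder: 0.1% of requests may fail
)
```

Rejecting a malformed request at the boundary with a list of specific violations is usually cheaper to debug than letting it surface later as a strange prediction.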

Packaging determines reproducibility and portability. Containerized images with pinned dependencies, system libraries, and a clear entry point reduce “works on my machine” incidents. Model artifacts should be versioned immutably, with metadata that records training data hashes, feature definitions, and evaluation metrics. A minimal serving layer—input validation, feature transformation parity with training, and careful threading or async handling—keeps the surface area manageable. Common issues to guard against include feature skew (the live features differ from training), locale or encoding mismatches, and nondeterminism due to random seeds or hardware-specific kernels.
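Immutable versioning can be sketched as a manifest that records content hashes next to feature definitions and metrics, plus a registry that refuses to overwrite an existing version with different content. `ModelManifest`, `build_manifest`, `verify_artifact`, and `ArtifactRegistry` are hypothetical; a real setup would typically persist manifests in a model registry or object store rather than in memory.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import Dict, Iterable, Tuple


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)
class ModelManifest:
    """Hypothetical manifest format; field names are illustrative."""
    model_name: str
    version: str
    model_sha256: str                       # hash of the serialized model bytes
    training_data_sha256: str               # hash of the training data snapshot
    feature_definitions: Tuple[str, ...]    # feature names (or definition IDs) used in training
    metrics: Tuple[Tuple[str, float], ...]  # evaluation metrics as sorted (name, value) pairs

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)


def build_manifest(name: str, version: str, model_bytes: bytes, data_bytes: bytes,
                   features: Iterable[str], metrics: Dict[str, float]) -> ModelManifest:
    return ModelManifest(
        model_name=name,
        version=version,
        model_sha256=sha256_hex(model_bytes),
        training_data_sha256=sha256_hex(data_bytes),
        feature_definitions=tuple(sorted(features)),
        metrics=tuple(sorted(metrics.items())),
    )


def verify_artifact(manifest: ModelManifest, model_bytes: bytes) -> bool:
    """Before serving, confirm the bytes being loaded are the bytes that were evaluated."""
    return manifest.model_sha256 == sha256_hex(model_bytes)


class ArtifactRegistry:
    """Hypothetical in-memory stand-in for a registry that treats versions as immutable."""

    def __init__(self) -> None:
        self._store: Dict[Tuple[str, str], ModelManifest] = {}

    def register(self, manifest: ModelManifest) -> None:
        key = (manifest.model_name, manifest.version)
        existing = self._store.get(key)
        if existing is not None and existing != manifest:
            raise ValueError(f"{key} is already registered with different content")
        self._store[key] = manifest  # re-registering identical content is a no-op
```

Making re-registration idempotent but conflicting writes an error means a retried CI job is harmless, while an accidental overwrite of a released version fails loudly.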

Release strategies trade speed against risk. Blue–green deployment swaps traffic between two identical environments, simplifying rollback at the cost of duplicate capacity. Canary releases shift a small percentage of traffic to the new version, watching key indicators before gradual ramp-up. A/B testing compares model variants for business outcomes while preserving guardrails for latency and errors. Useful indicators include request rate, p50/p95 latency, timeouts, 4xx/5xx error ratios, and application-specific metrics such as recommendation click-through or fraud catch rate. A straightforward rule like “pause rollout if p95 grows by 20% or error ratio doubles for 5 minutes” turns folklore into policy.
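The rollout rule quoted above translates directly into code. This sketch assumes per-minute windows of p95 latency and error ratio for both baseline and canary, and hashes a request ID to pin a stable slice of traffic to the canary; the function names and default thresholds are illustrative.

```python
import hashlib
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass
class WindowStats:
    p95_ms: float        # p95 latency over a one-minute window
    error_ratio: float   # failed / total requests in the same window


def in_canary(request_id: str, canary_percent: float) -> bool:
    """Deterministically send roughly `canary_percent`% of IDs to the canary."""
    bucket = int(hashlib.sha256(request_id.encode()).hexdigest(), 16) % 10_000
    return bucket < canary_percent * 100


def window_breaches(baseline: WindowStats, canary: WindowStats,
                    latency_growth: float = 0.20, error_factor: float = 2.0) -> bool:
    # Illustrative thresholds: "p95 grows by 20% or error ratio doubles".
    latency_bad = canary.p95_ms > baseline.p95_ms * (1 + latency_growth)
    # With a zero baseline error ratio, any canary errors count as a breach.
    errors_bad = canary.error_ratio > 0 and canary.error_ratio >= baseline.error_ratio * error_factor
    return latency_bad or errors_bad


def should_pause(windows: Iterable[Tuple[WindowStats, WindowStats]], sustained: int = 5) -> bool:
    """windows: (baseline, canary) pairs per minute, oldest first.
    Pause once the rule has been breached for `sustained` consecutive minutes."""
    streak = 0
    for baseline, canary in windows:
        streak = streak + 1 if window_breaches(baseline, canary) else 0
        if streak >= sustained:
            return True
    return False
```

Hashing rather than random sampling keeps a given request or user ID on the same version throughout the rollout, which makes per-version comparisons cleaner; requiring consecutive breaches keeps a single noisy minute from halting the release.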

Observability is the compass for production health. Logs capture request context and error narratives; metrics track rates, durations, and saturation; traces follow requests across dependencies to pinpoint bottlenecks. Add lightweight payload sampling to compare inputs and outputs across versions and detect drift early. Record both infrastructure signals (CPU, memory, concurrency) and model signals (confidence scores, calibration curves, class distribution). A small investment in structured events—request received, features computed, model invoked, response returned—pays dividends when investigating a spike in latency or a surprising prediction pattern.
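A minimal sketch of structured events with payload sampling, assuming a `sink` callable (for example, a log shipper) that accepts one JSON line per event. `RequestTracer`, the event names, and the 1% default sample rate are illustrative choices, not a particular observability library's API.

```python
import json
import random
import time
import uuid
from typing import Any, Callable, Optional


class RequestTracer:
    """Hypothetical helper: emit one structured JSON event per request stage."""

    def __init__(self, sink: Callable[[str], None], model_version: str,
                 sample_rate: float = 0.01, rng: Optional[random.Random] = None):
        self.sink = sink                  # receives one JSON line per event
        self.model_version = model_version
        self.sample_rate = sample_rate    # fraction of requests whose payloads are recorded
        self.rng = rng or random.Random()

    def handle(self, features_fn: Callable[[Any], list], model_fn: Callable[[list], Any],
               payload: Any) -> Any:
        request_id = str(uuid.uuid4())
        sampled = self.rng.random() < self.sample_rate
        start = time.perf_counter()

        def emit(event: str, **fields: Any) -> None:
            record = {
                "event": event,
                "request_id": request_id,          # ties all stages of one request together
                "model_version": self.model_version,
                "elapsed_ms": round((time.perf_counter() - start) * 1000, 3),
                **fields,
            }
            self.sink(json.dumps(record))

        emit("request_received", payload=payload if sampled else None)
        try:
            features = features_fn(payload)
            emit("features_computed", n_features=len(features))
            prediction = model_fn(features)
            emit("model_invoked")
        except Exception as exc:
            emit("error", error_type=type(exc).__name__)
            raise
        emit("response_returned", prediction=prediction if sampled else None)
        return prediction
```

Because every event carries the same `request_id` and a cumulative `elapsed_ms`, a latency spike can be attributed to a stage (feature computation versus model invocation) by grouping events per request.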

Automation: Pipelines, CI/CD, and Reliability by Design

Automation turns fragile heroics into dependable routines. Begin with a clear pipeline that links data ingestion, feature engineering, training, evaluation, packaging, and deployment. Each stage should emit artifacts and reports that the next stage consumes. Triggering can be schedule-based (nightly retrains), event-based (new data volume crossing a threshold), or manual (approval after review). Gate each transition with checks that reflect real risks: schema validation, data freshness windows, distribution shifts, and regression tests for serving code. If a model’s lift depends heavily on a narrow slice of data, add targeted tests for that slice rather than rely only on aggregate metrics.
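A gated pipeline can be sketched as a list of stages, each consuming the accumulated artifacts and followed by checks that stop the run with a reason. `Stage`, `run_pipeline`, `schema_gate`, and `freshness_gate` are hypothetical names; a production orchestrator would add scheduling, retries, and lineage tracking on top of the same idea.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Callable, Dict, Iterable, List, Optional

# Hypothetical minimal pipeline runner.
Artifacts = Dict[str, object]
Gate = Callable[[Artifacts], Optional[str]]   # returns a failure reason, or None to pass


class GateFailed(Exception):
    pass


@dataclass
class Stage:
    name: str
    run: Callable[[Artifacts], Artifacts]      # reads upstream artifacts, returns new ones
    gates: List[Gate] = field(default_factory=list)


def run_pipeline(stages: Iterable[Stage], artifacts: Optional[Artifacts] = None) -> Artifacts:
    artifacts = dict(artifacts or {})
    for stage in stages:
        artifacts.update(stage.run(artifacts))
        for gate in stage.gates:              # block the transition on the first failed check
            reason = gate(artifacts)
            if reason is not None:
                raise GateFailed(f"{stage.name}: {reason}")
    return artifacts


def schema_gate(required_columns: Iterable[str]) -> Gate:
    required = set(required_columns)
    def check(artifacts: Artifacts) -> Optional[str]:
        missing = required - set(artifacts["columns"])
        return f"missing columns {sorted(missing)}" if missing else None
    return check


def freshness_gate(max_age: timedelta, now: Optional[datetime] = None) -> Gate:
    def check(artifacts: Artifacts) -> Optional[str]:
        age = (now or datetime.now(timezone.utc)) - artifacts["data_timestamp"]
        return f"data is {age} old (limit {max_age})" if age > max_age else None
    return check
```

Attaching gates to the stage that produces the artifact, rather than to the stage that consumes it, means the failure message names the step that actually needs attention.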

CI/CD for ML blends software practices with model-specific validation. A typical path includes static analysis and unit tests for preprocessing code, fast integration tests that spin up a minimal serving instance, and performance smoke tests to catch latency regressions. On the model side, compare new metrics to a baseline with tolerance bands: differences that fall within normal run-to-run noise should not trigger a model swap. Infrastructure-as-code keeps environments reproducible; parameterize environment differences so confidence gained in staging transfers to production. Artifact promotion (from “staged” to “production-candidate” to “production”) clarifies what runs where and who approved it, reducing confusion during incidents.
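Baseline comparison with tolerance bands can be sketched as follows: each metric gets a noise band, and a candidate is promoted only if it clears the band on at least one metric without regressing beyond the band on any other. The metric names, band widths, and the three-way promote/hold/reject outcome are assumptions for illustration; in practice the bands would come from the variance observed across repeated evaluations.

```python
from typing import Dict, Tuple


def compare_to_baseline(candidate: Dict[str, float], baseline: Dict[str, float],
                        noise_band: Dict[str, float], higher_is_better: Dict[str, bool]
                        ) -> Tuple[str, Dict[str, str]]:
    """Hypothetical promotion check. Returns ("promote" | "hold" | "reject", per-metric verdicts)."""
    verdicts = {}
    for metric, base_value in baseline.items():
        delta = candidate[metric] - base_value
        if not higher_is_better[metric]:
            delta = -delta                     # normalize so positive always means "better"
        if delta > noise_band[metric]:
            verdicts[metric] = "improved"
        elif delta < -noise_band[metric]:
            verdicts[metric] = "regressed"
        else:
            verdicts[metric] = "within_noise"  # not evidence either way
    if "regressed" in verdicts.values():
        return "reject", verdicts
    if "improved" in verdicts.values():
        return "promote", verdicts
    return "hold", verdicts                    # keep the current model; no swap on noise
```

Returning per-metric verdicts alongside the decision gives reviewers the reason for a hold or reject without rerunning the evaluation.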

Drift and data quality deserve first-class automation. Embed sensors that compare feature distributions in production to the training snapshot; flag significant shifts with actionable context like which feature moved and by how much. Validate data against contracts: ranges, null ratios, and categorical vocabularies. Track model calibration over time; stable accuracy can mask deteriorating calibration (predicted probabilities drifting away from observed outcome rates), which degrades any decision made by thresholding those probabilities. Consider human-in-the-loop checkpoints where the cost of false positives or negatives is high; route uncertain cases for review, and feed the outcomes back into training. This closes the loop, so learning continues in production rather than being treated as a one-time event.
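A sketch of two of these sensors: a population stability index (PSI) per feature, computed over bins derived from training quantiles, and a contract check for ranges, null ratios, and vocabularies. PSI is one common drift statistic, swapped in here for illustration; the 0.2 default threshold is an assumed rule of thumb rather than a universal constant, and the contract format is hypothetical.

```python
import bisect
import math
from typing import Dict, List, Mapping, Sequence


def quantile_edges(reference: Sequence[float], n_bins: int = 10) -> List[float]:
    """Bin edges at the training distribution's quantiles (equal-frequency bins)."""
    ordered = sorted(reference)
    return [ordered[len(ordered) * i // n_bins] for i in range(1, n_bins)]


def bin_shares(values: Sequence[float], edges: List[float], eps: float = 1e-6) -> List[float]:
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    return [max(c / len(values), eps) for c in counts]   # eps avoids log(0) on empty bins


def psi(reference: Sequence[float], live: Sequence[float], n_bins: int = 10) -> float:
    """Population stability index: sum over bins of (live - ref) * ln(live / ref)."""
    edges = quantile_edges(reference, n_bins)
    ref, cur = bin_shares(reference, edges), bin_shares(live, edges)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


def drift_report(train: Mapping[str, Sequence[float]], live: Mapping[str, Sequence[float]],
                 threshold: float = 0.2) -> Dict[str, float]:
    """Name each drifted feature and how far it moved, so alerts are actionable."""
    scores = {name: psi(values, live[name]) for name, values in train.items()}
    return {name: round(s, 3) for name, s in scores.items() if s > threshold}


def check_contract(rows: List[dict], contract: Mapping[str, dict]) -> List[str]:
    """contract: column -> optional keys min, max, max_null_ratio, vocab (hypothetical format)."""
    if not rows:
        return ["empty batch"]
    violations = []
    for col, rules in contract.items():
        values = [row.get(col) for row in rows]
        present = [v for v in values if v is not None]
        null_ratio = 1 - len(present) / len(values)
        limit = rules.get("max_null_ratio", 0.0)
        if null_ratio > limit:
            violations.append(f"{col}: null ratio {null_ratio:.2f} exceeds {limit}")
        if "min" in rules and any(v < rules["min"] for v in present):
            violations.append(f"{col}: values below min {rules['min']}")
        if "max" in rules and any(v > rules["max"] for v in present):
            violations.append(f"{col}: values above max {rules['max']}")
        if "vocab" in rules:
            unknown = sorted({v for v in present if v not in rules["vocab"]})
            if unknown:
                violations.append(f"{col}: unknown categories {unknown}")
    return violations
```

Deriving bin edges from the training snapshot, rather than from the live window, keeps the reference fixed so that scores from different days are comparable.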

Finally, measure the pipeline itself. Useful delivery metrics include lead time from commit to production, deployment frequency, change failure rate, and mean time to recovery. When these trends improve, teams gain confidence to ship smaller, safer changes. Automation should remove toil without hiding complexity; dashboards that surface queue backlogs, test durations, and flaky steps prevent silent erosion of reliability. The result is a workflow where models move forward with less drama, and rollbacks are uneventful, not because they never happen, but because they are routine and reversible.
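The four delivery metrics can be computed from a simple deployment log. This sketch assumes each record carries the commit time, the deploy time, whether the change caused a production failure, and when service was restored; `Deployment` and `delivery_metrics` are hypothetical, and recovery time is measured from deployment rather than from incident detection as a simplification.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import List, Optional


@dataclass
class Deployment:
    """Hypothetical deployment record."""
    commit_time: datetime
    deploy_time: datetime
    failed: bool = False                       # did this change cause a production failure?
    restored_time: Optional[datetime] = None   # when service was restored, if it failed


def delivery_metrics(deploys: List[Deployment], period_days: float) -> dict:
    lead_times = [d.deploy_time - d.commit_time for d in deploys]
    failures = [d for d in deploys if d.failed]
    # Simplification: recovery measured from deploy time, not from incident detection.
    recoveries = [d.restored_time - d.deploy_time for d in failures if d.restored_time]
    return {
        "median_lead_time": median(lead_times) if lead_times else None,
        "deploys_per_week": len(deploys) / (period_days / 7),
        "change_failure_rate": len(failures) / len(deploys) if deploys else 0.0,
        "mean_time_to_recovery": (sum(recoveries, timedelta()) / len(recoveries)
                                  if recoveries else None),
    }
```

The median is used for lead time because a single change stuck in review for weeks would otherwise dominate a mean.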

Scalability: Handling Growth Without Surprises

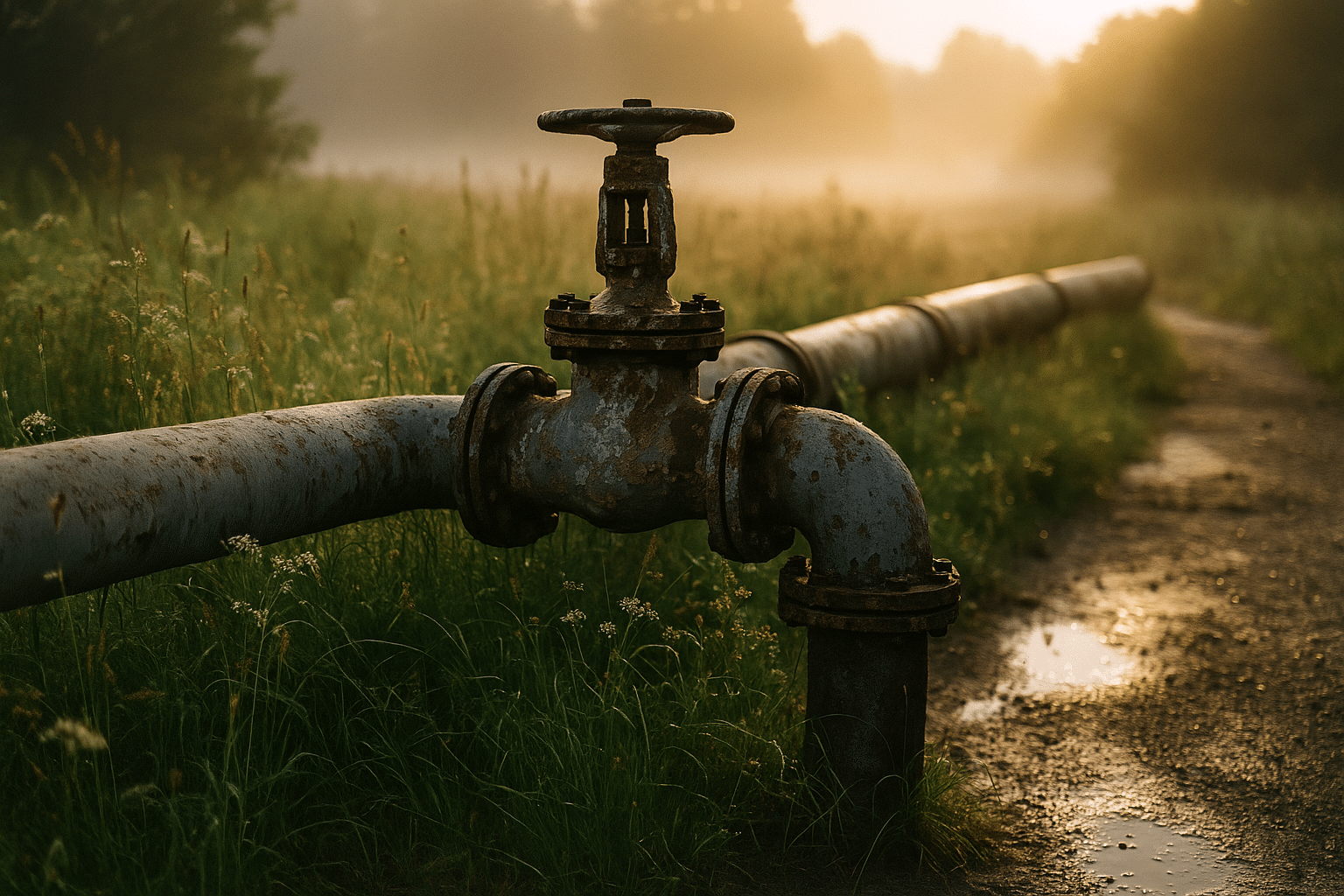

Scalability starts with demand characterization. Is your traffic spiky, diurnal, or steady? Are requests independent, or do they arrive in bursts tied to upstream events? Horizontal scaling (adding instances) usually provides more resilient headroom than vertical scaling, which increases instance size but can hit memory or parallelism ceilings. Autoscaling works well when signals match the bottleneck: CPU for compute-heavy models, memory for large embeddings, concurrency or queue depth for I/O-bound workloads, and request latency as a holistic indicator. Aim to scale up fast and scale down cautiously, smoothing oscillations that can otherwise thrash caches and warm pools.
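A target-tracking autoscaler that scales up immediately but scales down only after a stabilization window can be sketched as below. The proportional formula, desired = ceil(current * observed / target), mirrors the one documented for the Kubernetes Horizontal Pod Autoscaler, but the class, parameter names, and window length here are illustrative assumptions.

```python
import math
from collections import deque


class Autoscaler:
    """Illustrative target tracking: scale up immediately, scale down only to the
    highest recommendation seen over the last `downscale_window` evaluations."""

    def __init__(self, target_per_replica: float, min_replicas: int = 1,
                 max_replicas: int = 50, downscale_window: int = 5):
        self.target = target_per_replica          # e.g. in-flight requests per replica
        self.min_replicas = min_replicas
        self.max_replicas = max_replicas
        self.recent = deque(maxlen=downscale_window)

    def recommend(self, current_replicas: int, observed_per_replica: float) -> int:
        raw = math.ceil(current_replicas * observed_per_replica / self.target)
        raw = max(self.min_replicas, min(self.max_replicas, raw))
        self.recent.append(raw)
        if raw >= current_replicas:
            return raw                            # scale up (or hold) without delay
        # Scale down cautiously: a brief dip cannot shrink capacity below recent needs.
        return min(current_replicas, max(self.recent))
```

The `observed_per_replica` signal should be the one that matches the bottleneck (CPU, memory, concurrency, or queue depth); the asymmetric rule is what keeps a momentary lull from tearing down warm capacity that the next burst will need.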

Performance tuning reduces the need for raw scale. Techniques include model compression methods such as quantization (post-training or quantization-aware training) and pruning, each evaluated against the target accuracy. Batching improves throughput for compatible workloads by amortizing overhead; choose batch sizes that respect latency SLOs. Caching common features or intermediate results can cut repeated computation, especially for personalization where user traits change slowly. Consider warm-start pools to mitigate cold starts, and pin critical assets in memory to avoid disk thrash. As a rule of thumb, measure tail latency separately, since users experience the tail rather than the average, and treat p95 and p99 as first-class citizens in dashboards and alarms.
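Dynamic batching can be sketched with `asyncio`: requests wait briefly so that several can share one model call, and a batch is flushed when it is full or when its first request has waited `max_wait_s`. `MicroBatcher` and `predict_batch` are hypothetical names; `max_wait_s` is the knob that trades throughput against the latency SLO, since it can add up to that much to a request's latency.

```python
import asyncio
from typing import Any, Callable, List, Optional


class MicroBatcher:
    """Hypothetical batcher: collect concurrent requests into one model call. A batch is
    flushed when it reaches max_batch_size or its first request has waited max_wait_s."""

    def __init__(self, predict_batch: Callable[[List[Any]], List[Any]],
                 max_batch_size: int = 16, max_wait_s: float = 0.005):
        self.predict_batch = predict_batch      # list of inputs -> list of outputs
        self.max_batch_size = max_batch_size
        self.max_wait_s = max_wait_s            # upper bound on time spent filling a batch
        self._queue: Optional[asyncio.Queue] = None
        self._worker: Optional[asyncio.Task] = None

    async def predict(self, item: Any) -> Any:
        if self._worker is None:                # create loop-bound objects inside the running loop
            self._queue = asyncio.Queue()
            self._worker = asyncio.create_task(self._run())
        future = asyncio.get_running_loop().create_future()
        await self._queue.put((item, future))
        return await future

    async def _run(self) -> None:
        loop = asyncio.get_running_loop()
        while True:
            batch = [await self._queue.get()]   # block until the first request arrives
            deadline = loop.time() + self.max_wait_s
            while len(batch) < self.max_batch_size:
                remaining = deadline - loop.time()
                if remaining <= 0:
                    break
                try:
                    batch.append(await asyncio.wait_for(self._queue.get(), remaining))
                except asyncio.TimeoutError:
                    break
            try:
                outputs = self.predict_batch([item for item, _ in batch])
                if len(outputs) != len(batch):
                    raise ValueError("predict_batch must return one output per input")
                for (_, fut), out in zip(batch, outputs):
                    if not fut.done():          # the caller may have been cancelled meanwhile
                        fut.set_result(out)
            except Exception as exc:
                for _, fut in batch:
                    if not fut.done():
                        fut.set_exception(exc)
```

Because `predict_batch` runs on the event loop in this sketch, a CPU-heavy model would typically be moved to an executor (for example via `loop.run_in_executor`) so that batching does not stall request handling.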

Topology matters. Co-locate compute near data to avoid cross-region hops, and keep feature stores consistent to prevent stale reads. For global audiences, multi-region active–active patterns reduce latency and improve resilience, but they introduce consistency trade-offs and operational cost. When a hard consistency guarantee is mandatory, prefer clear ownership per region and explicit routing, rather than hidden replication that surprises downstream consumers. Backpressure and queue limits protect upstream systems when the model service is saturated, while circuit breakers prevent cascading failures by failing fast and recovering gracefully.
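A circuit breaker fits in a few dozen lines. In this sketch (class names and thresholds are hypothetical), the breaker opens after a run of consecutive failures, rejects calls immediately while open, and after a cool-down lets a trial call through: success closes it, failure reopens it. The injectable `clock` makes the behavior testable, and the sketch assumes single-threaded use.

```python
import time
from typing import Any, Callable, Optional


class CircuitOpenError(RuntimeError):
    pass


class CircuitBreaker:
    """Hypothetical breaker: closed -> open after `failure_threshold` consecutive failures;
    after `reset_timeout_s` one trial call is allowed (half-open)."""

    def __init__(self, failure_threshold: int = 5, reset_timeout_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout_s = reset_timeout_s
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None   # None means the circuit is closed

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_timeout_s:
            return "half_open"
        return "open"

    def call(self, fn: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
        state = self.state
        if state == "open":
            raise CircuitOpenError("failing fast: downstream marked unhealthy")
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if state == "half_open" or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()    # (re)open and restart the cool-down
            raise
        self.failures = 0                        # any success closes the circuit
        self.opened_at = None
        return result
```

Failing fast while open gives the saturated dependency room to recover instead of piling more requests onto it, which is how the breaker stops a local failure from cascading.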

Cost is the fourth dimension of scalability. Right-size instance types to match workload profiles, and separate latency-critical paths from background batch jobs to avoid resource contention. Rate limiting protects fairness across tenants; dynamic pricing signals can steer non-urgent workloads to off-peak windows. Observability again earns its keep: tie spend lines to request classes, model versions, and features used. When an optimization lands, verify the effect end to end: lower latency should translate to better user outcomes or lower unit cost, not just prettier benchmarks. Sustainable scale balances performance, reliability, and fiscal discipline.
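For the rate-limiting point, a per-tenant token bucket is one standard technique, chosen here for illustration: each tenant accrues tokens at a fixed rate up to a burst capacity, and a request is admitted only if enough tokens are available. `TokenBucket`, `TenantLimiter`, and the example limits are hypothetical.

```python
import time
from typing import Callable, Dict, Tuple


class TokenBucket:
    """Admit a request if tokens are available; tokens refill at `rate` per second up to `burst`."""

    def __init__(self, rate: float, burst: float, clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = burst
        self.tokens = float(burst)
        self.clock = clock
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


class TenantLimiter:
    """One bucket per tenant so a noisy tenant cannot starve the others."""

    def __init__(self, limits: Dict[str, Tuple[float, float]],
                 default: Tuple[float, float] = (10.0, 20.0),
                 clock: Callable[[], float] = time.monotonic):
        self.limits = limits        # tenant -> (rate per second, burst); hypothetical config
        self.default = default
        self.clock = clock
        self.buckets: Dict[str, TokenBucket] = {}

    def allow(self, tenant: str, cost: float = 1.0) -> bool:
        if tenant not in self.buckets:
            rate, burst = self.limits.get(tenant, self.default)
            self.buckets[tenant] = TokenBucket(rate, burst, self.clock)
        return self.buckets[tenant].allow(cost)
```

The `cost` parameter allows expensive request classes (for example, large batch scoring calls) to consume more of a tenant's allowance than cheap ones, which connects rate limiting back to spend per request class.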

Conclusion: A Practical Path to Production-Ready ML

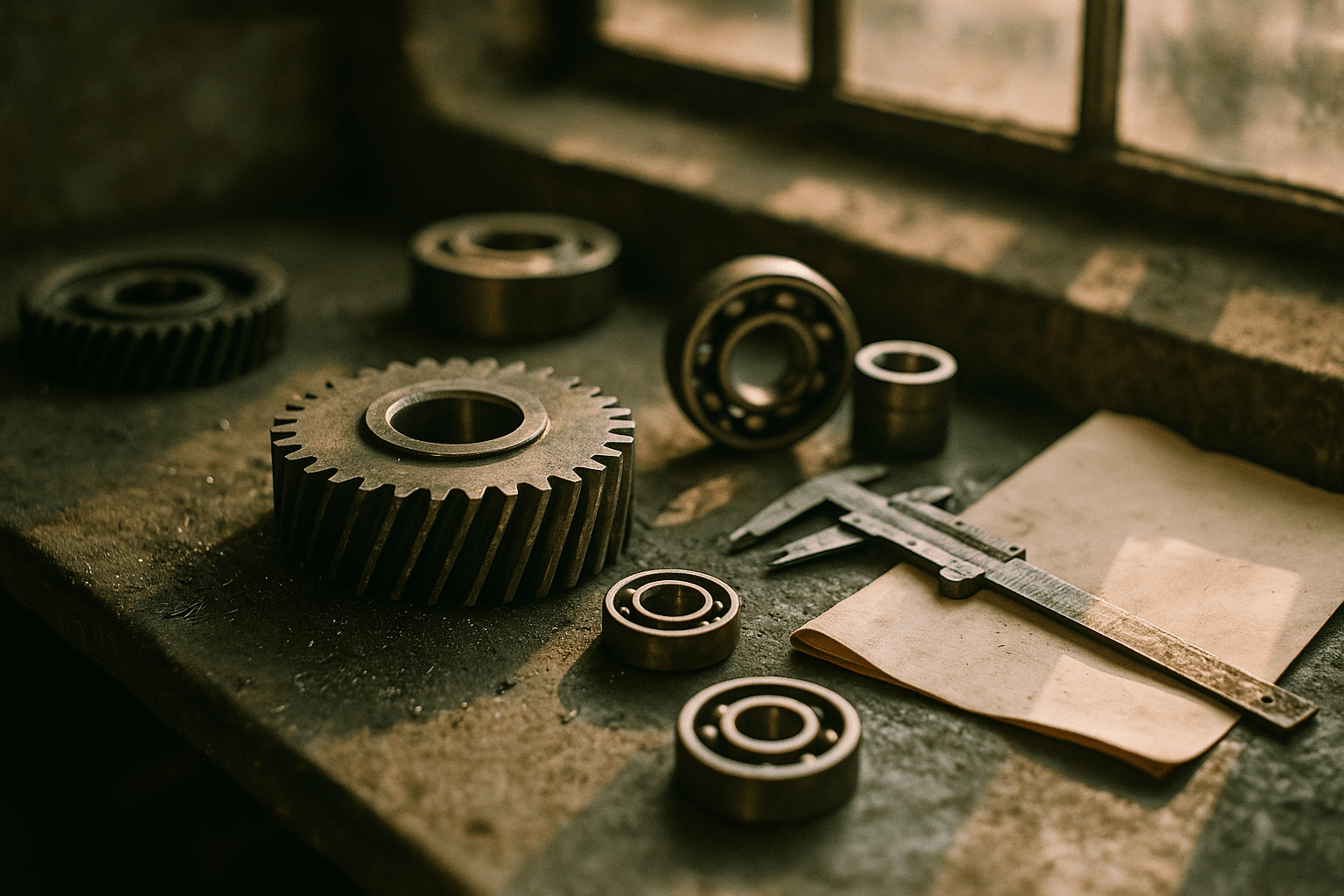

Deployment, automation, and scalability are not isolated disciplines; together they form the gearbox that turns trained models into dependable products. Deployment sets the contract and the runtime guardrails. Automation converts careful practice into repeatable motion, so change accelerates without chaos. Scalability ensures the whole system stays composed under load and budget constraints. The throughline is clarity: define what “good” looks like in terms users feel—latency, accuracy, freshness, cost—and build feedback loops that keep you on target as data and demands evolve.

If you are getting started, avoid the trap of grand rewrites. Establish a simple, observable service: clear input/output schemas, versioned artifacts, and a minimal dashboard for requests, latencies, and errors. Add a small CI path that builds a container, runs unit tests, spins up a test service, and packages artifacts with metadata. Introduce a canary rollout policy and write down the stop conditions. From there, pick one scalability improvement that aligns with your profile, such as batching for throughput or warm pools to tame cold starts. Each step should be measurable and reversible.

For teams seeking structure, here is a compact checklist:

– Define service SLOs: p95 latency, error budget, freshness window, and unit cost targets.

– Version everything: data schema, feature definitions, model artifacts, and serving images.

– Automate gates: schema checks, drift detection, calibration monitoring, and performance smoke tests.

– Choose a rollout method with clear abort criteria and automated rollback.

– Instrument for tail latency and saturation; tie metrics to business outcomes and spend.
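The first checklist item is easier to act on once an SLO is expressed as a budget. For example, a 99.9% availability target over 30 days allows 0.1% of 43,200 minutes, or 43.2 minutes, of downtime. The helper names below are hypothetical, and the sketch assumes availability is measured as a fraction of successful requests or of time.

```python
from datetime import timedelta
from typing import Optional


def error_budget(slo_target: float, window: timedelta = timedelta(days=30),
                 total_requests: Optional[int] = None) -> dict:
    """Hypothetical helper: translate an SLO (e.g. 0.999) into allowed downtime and failures."""
    allowed_fraction = 1.0 - slo_target
    budget = {"allowed_downtime": window * allowed_fraction}
    if total_requests is not None:
        budget["allowed_failed_requests"] = round(total_requests * allowed_fraction)
    return budget


def budget_remaining(slo_target: float, total_requests: int, failed_requests: int) -> float:
    """Fraction of the error budget still unspent (negative once the SLO is breached)."""
    allowed = total_requests * (1.0 - slo_target)
    return 1.0 - failed_requests / allowed if allowed > 0 else 0.0
```

A remaining-budget figure like this is a natural input to the rollout abort criteria: a team might pause risky releases once most of the window's budget is spent.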

As your practice matures, consider a lightweight maturity ladder: from ad hoc releases, to standardized packaging and CI, to governed model promotion and automated rollouts, and finally to multi-region resilience with cost-aware scaling. Each rung earns you confidence and reduces cognitive load. There is craft in the details, but the direction is straightforward: small, observable, policy-driven changes that compound. With that mindset, you can ship models that not only predict well in isolation, but also perform reliably in the messy, dynamic world where users live.